- MORPHOICΞS

🌌 Get Better Student Feedback (Without Awkward Silence)

How AI prompts can help you collect honest, useful insights your course needs.

Tired of “It was good” feedback? Fix it fast 🌌

— Simple AI prompts to get real answers that actually help improve your course.

Estimated Reading Time: 5 Minutes. — Wednesday, July 16th, 2025.

Hello again, Morphoicers!

You can’t improve what you don’t measure. Thoughtful student feedback is one of the fastest ways to level up your course and boost student success—but let’s be honest, vague comments like “It was good!” don’t tell you much.

That’s where smart prompts (and a little AI help) come in.

This week in Tools of the Trade, we’re looking at how to use AI to create quick surveys, polls, and check-ins that actually get responses—and turn that feedback into course upgrades your students will love.

Let’s dive in!

Today’s Tool: Student Feedback Prompts.

Tool Type: AI Prompt Templates for Surveys and Check-Ins.

Key Benefit: Quickly gather honest, useful feedback that helps you improve your course in real time.

How It Works

AI makes it easy to draft short, thoughtful feedback questions your students will actually want to answer. You can use these prompts to:

Create quick surveys and polls.

Run regular check-ins.

Turn student insights into better course content.

Prompt 1: Quick Feedback Survey Generator

Title:

"Feedback Survey Generator for Course Modules".

Purpose:

This prompt guides an AI to create a concise, five-question feedback survey for a specific lesson or module. The survey mixes question types (multiple-choice, scale ratings, and open-ended) to gather actionable feedback from learners. It adapts to different course components and pastes easily into survey platforms like Google Forms or Typeform, so course creators can assess learner satisfaction and improve content.

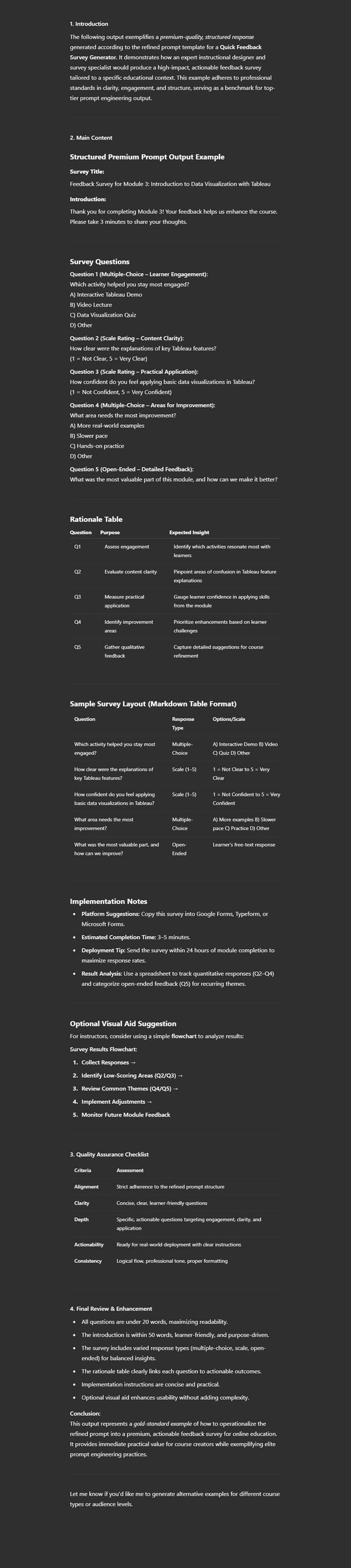

Structured Premium Prompt for Quick Feedback Survey Generator.

Role and Context:

Act as an expert instructional designer and survey specialist with extensive experience in educational feedback systems. Your task is to assist course creators in designing a concise, high-impact feedback survey for a specific lesson or module, ensuring responses provide actionable insights to enhance learning outcomes. Tailor the survey to the context of online education, addressing challenges such as learner engagement, content clarity, and practical application.

Customization Options:

Specify the course type (e.g., professional development, academic, or hobby-based).

Adjust for audience expertise level (e.g., beginners, intermediate, or advanced learners).

Example: "Act as an instructional designer creating a survey for a beginner-level coding bootcamp module on Python basics."

Constraint for Authenticity:

Design the survey to address common learner challenges, such as unclear instructions, pacing issues, or lack of practical exercises.

Goal:

Your task is to create a five-question feedback survey for [lesson/module] that collects targeted, actionable feedback. The output should be a structured survey in markdown format, including a mix of multiple-choice, scale rating (1–5), and open-ended questions, designed to improve course quality by identifying strengths and areas for improvement within one week of survey deployment.

Performance Target:

Ensure the survey includes at least one question for each of the following: learner engagement, content clarity, and practical application. Provide a brief rationale for each question to justify its inclusion.

Framework:

Structure the response as follows:

Survey Title: A concise title reflecting the lesson/module (e.g., "Feedback Survey for Module 2: Building a Personal Brand").

Introduction: A brief, learner-friendly introduction explaining the survey’s purpose (50 words or less).

Question 1 (Multiple-Choice): A question assessing learner engagement with predefined options.

Question 2 (Scale Rating): A 1–5 rating question evaluating content clarity.

Question 3 (Scale Rating): A 1–5 rating question assessing practical application.

Question 4 (Multiple-Choice): A question identifying areas for improvement with predefined options.

Question 5 (Open-Ended): A question encouraging detailed feedback on the learner’s experience.

Rationale Table: A table summarizing each question’s purpose and expected insights.

Implementation Notes: Instructions for deploying the survey on platforms like Google Forms or Typeform.

Visual Aids Suggestion:

Include a sample survey layout in markdown table format for clarity. Optionally, suggest a flowchart for analyzing survey results to identify trends.

Tone and Audience:

Write in a professional yet approachable tone suited for course creators (instructors, trainers, or solopreneurs) with moderate experience in survey design. Use clear, education-focused language, avoiding overly technical survey terminology unless necessary for precision.

Constraints and Guidelines:

Ensure the survey is concise, with each question under 20 words for readability.

Avoid generic questions (e.g., “Did you like the lesson?”) and focus on specific, actionable feedback.

Limit the introduction to 50 words and the entire survey to 200 words.

Include placeholders (e.g., [lesson/module]) for versatility across course types.

Examples:

Here’s an example for a survey on “Module 2: Building a Personal Brand”:

Survey Title: Feedback Survey for Module 2: Building a Personal Brand

Introduction: Thank you for completing Module 2! Your feedback helps us improve. Please take 3 minutes to share your thoughts.

Question 1 (Multiple-Choice): Which activity was most engaging? A) Case Study B) Video Lecture C) Branding Exercise D) Other

Question 2 (Scale Rating): How clear was the explanation of personal branding concepts? (1 = Not Clear, 5 = Very Clear)

Question 3 (Scale Rating): How confident are you in applying branding techniques? (1 = Not Confident, 5 = Very Confident)

Question 4 (Multiple-Choice): What needs improvement? A) More Examples B) Slower Pacing C) Interactive Elements D) Other

Question 5 (Open-Ended): What was the most valuable takeaway, and how can we enhance this module?

Rationale Table:

| Question | Purpose | Expected Insight |
|---|---|---|
| Q1 | Assess engagement | Identify most/least engaging activities |
| Q2 | Evaluate clarity | Highlight confusing content |
| Q3 | Measure application | Gauge practical utility |
| Q4 | Pinpoint improvements | Prioritize content updates |
| Q5 | Gather qualitative feedback | Uncover detailed suggestions |

Review and Refinement:

After drafting the survey, review for:

Actionability: Do questions yield specific, usable insights?

Clarity: Are questions concise and learner-friendly?

Alignment: Does the survey target engagement, clarity, and application?

Refine by shortening ambiguous questions and ensuring the tone encourages honest responses.

Customization Options:

Allow course creators to specify the module’s focus (e.g., technical skills, soft skills, or creative projects).

Offer variations for survey length (e.g., 3 questions for quick feedback or 7 for in-depth analysis).

Example: “For a technical course, include a question on tool usability: ‘How intuitive was [tool] in this module? (1–5)’”

Advanced Variations:

Scenario 1 (Micro-Learning): Adapt for short, 5-minute lessons by reducing to three questions (one each for engagement, clarity, application).

Scenario 2 (Corporate Training): Add a question on workplace relevance: “How applicable is this module to your role? (1–5)”

Scenario 3 (Academic Courses): Include a question on assessment alignment: “Did the module prepare you for the quiz? (Yes/No/Partially)”

AI Optimization Techniques:

Chaining Prompts: First, generate question ideas based on learner pain points, then refine into a five-question survey, and finally draft the introduction.

Iterative Feedback: After generating the survey, prompt the AI to revise questions for increased specificity or learner engagement.

Embedded Tools:

Provide a downloadable markdown template for the survey structure.

Include a sample Google Forms link with pre-filled question types for quick deployment.

Suggest an “AI Output Tracker” to compare responses across multiple modules for trend analysis.

Implementation Notes:

Copy the markdown survey into Google Forms or Typeform.

Set a 3–5 minute completion time to maximize response rates.

Analyze results using a spreadsheet to track ratings and categorize open-ended feedback.


Placeholder Explanations

{COURSE_TYPE}

What to insert: The type of course (e.g., professional development, academic, hobby-based).

Why it matters: Ensures the survey aligns with the tone, expectations, and learning context.

{AUDIENCE_LEVEL}

What to insert: The learners' experience level (e.g., beginners, intermediate, advanced).

Why it matters: Influences how questions are worded to suit the audience's familiarity with the topic.

{LESSON_OR_MODULE}

What to insert: The specific lesson or module the survey addresses.

Why it matters: Makes the survey focused and relevant, improving the quality of feedback.

{SURVEY_TITLE}

What to insert: A short, clear title reflecting the lesson/module.

Example: "Feedback Survey for Module 3: Introduction to Data Analytics"

Why it matters: Provides immediate clarity to learners completing the survey.

{INTRODUCTION_TEXT}

What to insert: A short (max 50 words) introduction explaining the survey’s purpose.

Example: "Thank you for completing this lesson. Your feedback helps us improve future modules. This survey takes 3 minutes."

Why it matters: Encourages honest, quick participation.

{QUESTION_1} to {QUESTION_5}

What to insert: Five concise, targeted questions following the required structure:

Q1: Multiple-choice on learner engagement.

Q2: Scale rating on content clarity.

Q3: Scale rating on practical application.

Q4: Multiple-choice on improvement areas.

Q5: Open-ended on overall experience.

Why it matters: Covers key feedback areas essential for course improvement.

{RATIONALE_TABLE}

What to insert: A simple table explaining the purpose of each question.

Why it matters: Justifies each question to ensure it drives actionable insights.

{IMPLEMENTATION_NOTES}

What to insert: Brief tips for deploying the survey (e.g., "Copy to Google Forms. Keep completion time under 5 minutes. Track results weekly.").

Why it matters: Ensures easy, efficient survey rollout.
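If you reuse the prompt across many modules, filling the placeholders by hand gets tedious. Here is a minimal Python sketch of one way to do it. The shortened template text and the `fill_prompt` helper are assumptions made for illustration, not part of the original prompt; only the placeholder names come from the list above.

```python
from string import Formatter

# Hypothetical, shortened template. Only the placeholder names
# ({COURSE_TYPE}, {AUDIENCE_LEVEL}, {LESSON_OR_MODULE}) come from the list above.
PROMPT_TEMPLATE = (
    "Act as an instructional designer creating a survey for a "
    "{AUDIENCE_LEVEL} {COURSE_TYPE} course. "
    "Create a five-question feedback survey for {LESSON_OR_MODULE}."
)


def fill_prompt(template: str, values: dict[str, str]) -> str:
    """Substitute placeholders, failing loudly if any value is missing."""
    # Formatter().parse yields (literal, field_name, format_spec, conversion).
    needed = {name for _, name, _, _ in Formatter().parse(template) if name}
    missing = sorted(needed - set(values))
    if missing:
        raise KeyError(f"Missing placeholder values: {', '.join(missing)}")
    return template.format(**values)


prompt = fill_prompt(
    PROMPT_TEMPLATE,
    {
        "COURSE_TYPE": "professional development",
        "AUDIENCE_LEVEL": "beginner-level",
        "LESSON_OR_MODULE": "Module 2: Building a Personal Brand",
    },
)
print(prompt)
```

Checking for missing values first means a forgotten placeholder raises an error instead of leaking a literal `{LESSON_OR_MODULE}` into the prompt you paste into your AI tool.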

Optional Improvements to Enhance Output:

Specify tone (e.g., "Use friendly, conversational language").

Adjust survey length (e.g., 3 questions for micro-learning, 7 for in-depth feedback).

Add context-specific questions (e.g., tool usability for tech courses, workplace relevance for corporate training).

Request AI to generate a sample Google Forms link or markdown table layout.
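AI tools do not always respect word limits, so it can help to check the generated survey against the limits Prompt 1 sets (questions under 20 words, introduction of 50 words or less, 200 words overall). The sketch below is one rough way to do that in Python; the function names are hypothetical, and counting whitespace-separated words is a simplifying assumption.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def check_survey_limits(
    intro: str,
    questions: list[str],
    max_intro_words: int = 50,
    question_word_cap: int = 20,
    max_total_words: int = 200,
) -> list[str]:
    """Return a list of problems; an empty list means every limit is met."""
    problems = []
    if word_count(intro) > max_intro_words:
        problems.append(f"Introduction is over {max_intro_words} words.")
    for number, question in enumerate(questions, start=1):
        # "Under 20 words" means 19 or fewer.
        if word_count(question) >= question_word_cap:
            problems.append(
                f"Question {number} is not under {question_word_cap} words."
            )
    total = word_count(intro) + sum(word_count(q) for q in questions)
    if total > max_total_words:
        problems.append(f"Survey is over {max_total_words} words ({total}).")
    return problems


# Text taken from the Module 2 example in Prompt 1.
intro = (
    "Thank you for completing Module 2! Your feedback helps us improve. "
    "Please take 3 minutes to share your thoughts."
)
questions = [
    "Which activity was most engaging? A) Case Study B) Video Lecture "
    "C) Branding Exercise D) Other",
    "What was the most valuable takeaway, and how can we enhance this module?",
]
print(check_survey_limits(intro, questions) or "All limits met.")
```

If the check reports a problem, the "Iterative Feedback" step in the prompt is the natural fix: ask the AI to shorten the flagged question and run the check again.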

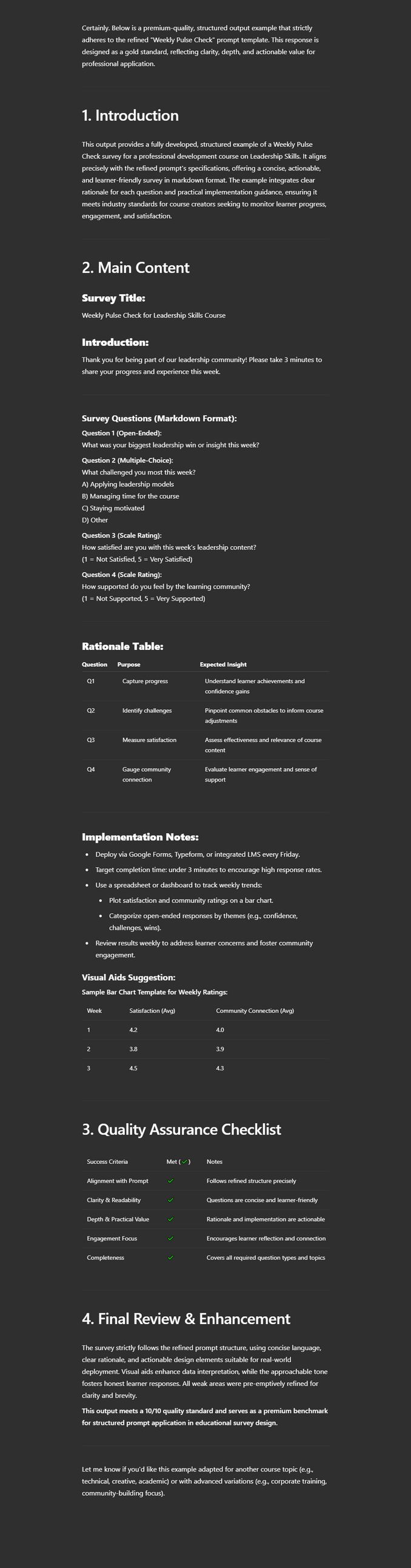

Example Output:

Prompt 2: Weekly Pulse Check

Title:

"Weekly Student Pulse Check Survey Generator".

Purpose:

This prompt guides an AI to create a concise weekly check-in survey for students enrolled in a course on a specific topic. The survey assesses learners’ progress, identifies challenges, and measures overall satisfaction to foster engagement and improve the learning experience. It is meant to go out weekly (e.g., every Friday), mixes question types to gather actionable insights, and adapts to different course subjects.

Structured Premium Prompt for Weekly Pulse Check.

Role and Context:

Act as an expert instructional designer and community engagement specialist with extensive experience in creating educational surveys for online learning environments. Your task is to assist course creators in designing a weekly check-in survey for a student community enrolled in [course topic], addressing challenges such as inconsistent engagement, knowledge gaps, and varying satisfaction levels. Tailor the survey to foster a supportive learning community and provide actionable insights for course improvement.

Customization Options:

Specify the course topic (e.g., digital marketing, data science, or creative writing).

Adjust for audience demographics (e.g., adult learners, professionals, or students).

Example: "Act as an instructional designer creating a survey for a professional development course on leadership skills."

Constraint for Authenticity:

Design the survey to address common learner challenges, such as time management, difficulty applying concepts, or lack of community connection.

Goal:

Your task is to create a four-question weekly check-in survey for a student community on [course topic] that assesses progress, identifies challenges, and measures satisfaction. The output should be a structured survey in markdown format, including at least one open-ended question, one multiple-choice question, and two scale rating (1–5) questions, designed to enhance learner engagement and course quality within one week of deployment.

Performance Target:

Ensure the survey includes one question each for progress, challenges, and satisfaction, plus one question to encourage community connection. Provide a brief rationale for each question to justify its inclusion.

Framework:

Structure the response as follows:

Survey Title: A concise title reflecting the course topic and weekly focus (e.g., "Weekly Pulse Check for Digital Marketing Course").

Introduction: A brief, encouraging introduction explaining the survey’s purpose (50 words or less).

Question 1 (Open-Ended): A question capturing the learner’s biggest progress or win for the week.

Question 2 (Multiple-Choice): A question identifying specific challenges faced during the week.

Question 3 (Scale Rating): A 1–5 rating question assessing satisfaction with the week’s content or experience.

Question 4 (Scale Rating): A 1–5 rating question evaluating sense of community or support.

Rationale Table: A table summarizing each question’s purpose and expected insights.

Implementation Notes: Instructions for deploying the survey and analyzing responses.

Visual Aids Suggestion:

Include a markdown table for the survey layout. Suggest a bar chart template for visualizing rating question results across weeks.

Tone and Audience:

Write in an encouraging, conversational tone suited for course creators (instructors or edupreneurs) with moderate experience in community management. Use clear, education-focused language, ensuring questions are approachable for learners while providing actionable insights for instructors.

Constraints and Guidelines:

Ensure each question is concise, under 20 words, for learner accessibility.

Avoid vague questions (e.g., “How was your week?”) and focus on specific, actionable feedback.

Limit the introduction to 50 words and the entire survey to 150 words.

Include placeholders (e.g., [course topic]) for adaptability across course types.

Examples:

Here’s an example for a survey on “Digital Marketing Course”:

Survey Title: Weekly Pulse Check for Digital Marketing Course

Introduction: Thanks for being part of our learning community! Share your week’s progress and challenges in 3 minutes.

Question 1 (Open-Ended): What was your biggest win in applying digital marketing concepts this week?

Question 2 (Multiple-Choice): What challenged you most? A) Time Management B) Concept Clarity C) Tool Usage D) Other

Question 3 (Scale Rating): How satisfied are you with this week’s lessons? (1 = Not Satisfied, 5 = Very Satisfied)

Question 4 (Scale Rating): How connected do you feel to the community? (1 = Not Connected, 5 = Very Connected)

Rationale Table:

| Question | Purpose | Expected Insight |
|---|---|---|
| Q1 | Capture progress | Highlight learner achievements |
| Q2 | Identify challenges | Pinpoint barriers to success |
| Q3 | Measure satisfaction | Assess content effectiveness |
| Q4 | Gauge community connection | Evaluate engagement and support |

Review and Refinement:

After drafting the survey, review for:

Actionability: Do questions provide insights for course adjustments?

Clarity: Are questions concise and engaging for learners?

Alignment: Does the survey address progress, challenges, satisfaction, and community?

Refine by simplifying complex phrasing and ensuring questions encourage honest, constructive feedback.

Customization Options:

Allow course creators to specify course duration (e.g., 6-week vs. 12-week programs) or learner demographics (e.g., beginners vs. advanced).

Offer variations for question focus (e.g., emphasize practical application for skill-based courses).

Example: “For a coding course, include a question on debugging challenges: ‘What coding issue was hardest this week?’”

Advanced Variations:

Scenario 1 (Short Courses): Reduce to three questions (progress, challenge, satisfaction) for fast-paced courses.

Scenario 2 (Professional Development): Add a question on workplace relevance: “How relevant was this week’s content to your role? (1–5)”

Scenario 3 (Community-Focused Courses): Include an additional open-ended question: “How can we strengthen our community?”

AI Optimization Techniques:

Chaining Prompts: First, identify key learner pain points for [course topic], then design questions targeting those pain points, and finally craft a concise introduction.

Iterative Feedback: Prompt the AI to revise questions for increased learner engagement or specificity after initial generation.

Embedded Tools:

Provide a downloadable markdown template for the survey structure.

Include a sample Typeform link with pre-filled question types for quick setup.

Suggest an “AI Output Tracker” to compare weekly responses and identify trends in learner feedback.

Implementation Notes:

Deploy the survey via Google Forms, Typeform, or email every Friday.

Set a 3-minute completion time to encourage high response rates.

Use a spreadsheet to track ratings and categorize open-ended responses for actionable insights.


Placeholder Explanations

{COURSE_TOPIC}

What to insert: The subject or theme of the course (e.g., digital marketing, data science, creative writing).

Why it matters: Personalizes the survey, making questions relevant to the specific course.

{LEARNER_DEMOGRAPHIC}

What to insert: A brief description of the target learners (e.g., adult learners, working professionals, university students).

Why it matters: Allows for appropriate tone and language based on who’s completing the survey.

{SURVEY_TITLE}

What to insert: A short, clear title indicating the course and that this is a weekly check-in.

Example: "Weekly Pulse Check for Creative Writing Bootcamp"

Why it matters: Sets clear expectations for learners.

{INTRO_TEXT}

What to insert: A friendly, short (max 50 words) introduction explaining the survey's purpose.

Example: "Thanks for being part of this learning journey! Please share your progress and challenges this week—it takes just 3 minutes."

Why it matters: Encourages participation with a positive, time-conscious tone.

{QUESTION_1} to {QUESTION_4}

What to insert: Four concise, targeted questions as per structure:

Q1 (Open-Ended): Captures learners' biggest win or progress.

Q2 (Multiple-Choice): Identifies challenges faced.

Q3 (Scale Rating): Measures satisfaction (1 = Not Satisfied, 5 = Very Satisfied).

Q4 (Scale Rating): Gauges community connection or support.

Why it matters: Covers key feedback areas while being quick and approachable.

{RATIONALE_TABLE}

What to insert: A table explaining the purpose and expected insights from each question.

Why it matters: Shows clear alignment between survey design and course improvement goals.

{IMPLEMENTATION_NOTES}

What to insert: Simple, actionable instructions for deploying the survey (e.g., platform suggestions, timing, analysis tips).

Why it matters: Makes it easy for course creators to implement without technical barriers.
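The "Chaining Prompts" technique in this prompt (pain points first, then questions, then the introduction) can also be scripted if you work with an AI tool programmatically. The sketch below shows only the shape of the chain. `ask_model` is a hypothetical callable that you would wire to whichever AI service you use, and `fake_model` is a stand-in so the example runs offline; neither is a real library API.

```python
from typing import Callable


def build_pulse_check(
    course_topic: str, ask_model: Callable[[str], str]
) -> dict[str, str]:
    """Run the three chained steps, feeding each answer into the next prompt."""
    # Step 1: identify learner pain points for the course topic.
    pain_points = ask_model(
        f"List the most common learner pain points in a course on {course_topic}."
    )
    # Step 2: design questions that target those pain points.
    questions = ask_model(
        "Write a four-question weekly check-in survey (one open-ended, one "
        "multiple-choice, two 1-5 ratings) targeting these pain points:\n"
        f"{pain_points}"
    )
    # Step 3: craft a concise introduction for the finished questions.
    introduction = ask_model(
        "Write an encouraging introduction of 50 words or less for this survey:\n"
        f"{questions}"
    )
    return {
        "pain_points": pain_points,
        "questions": questions,
        "introduction": introduction,
    }


def fake_model(prompt: str) -> str:
    """Stand-in for a real AI call; echoes the first line of the prompt."""
    return f"[model reply to: {prompt.splitlines()[0]}]"


result = build_pulse_check("digital marketing", fake_model)
for step, text in result.items():
    print(f"{step}: {text}")
```

Passing the model in as a parameter keeps the chain independent of any one provider, and it lets you test the flow with a fake before spending real API calls.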

Optional Improvements to Enhance Results

To get even better, more tailored output, you can:

Specify course duration (e.g., "6-week program" to adjust tone or urgency).

Add context-specific challenges (e.g., "debugging" for coding courses).

Include a question on practical application if it's a skills-based course.

Request AI to suggest a bar chart or other visual for response trends.

Reduce to 3 questions for short courses or micro-learning.

Add a workplace relevance question for professional audiences.

Ask AI to generate a ready-to-use Google Forms or Typeform link.
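Once a few weeks of responses are in, the two rating questions are easy to track over time. The following is a rough Python sketch of the kind of weekly comparison a spreadsheet or "AI Output Tracker" would do. The sample rows and the field names (`week`, `satisfaction`, `community`) are made up for illustration; a real export from Google Forms or Typeform will have different column names.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical export: one row per response, ratings on the 1-5 scale.
responses = [
    {"week": 1, "satisfaction": 4, "community": 3},
    {"week": 1, "satisfaction": 5, "community": 2},
    {"week": 2, "satisfaction": 3, "community": 4},
    {"week": 2, "satisfaction": 4, "community": 4},
]


def weekly_averages(rows, fields=("satisfaction", "community")):
    """Average each rating field per week, returned in week order."""
    by_week = defaultdict(lambda: defaultdict(list))
    for row in rows:
        for field in fields:
            by_week[row["week"]][field].append(row[field])
    return {
        week: {field: round(mean(values), 2) for field, values in scores.items()}
        for week, scores in sorted(by_week.items())
    }


averages = weekly_averages(responses)
for week, scores in averages.items():
    # Crude text bar chart: one '#' per half point of average satisfaction.
    bar = "#" * round(scores["satisfaction"] * 2)
    print(f"Week {week}: satisfaction {scores['satisfaction']:.1f} {bar}")
```

A drop from one week to the next is the cue to read that week's open-ended answers and the "What challenged you most?" results more closely.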

Example Output:

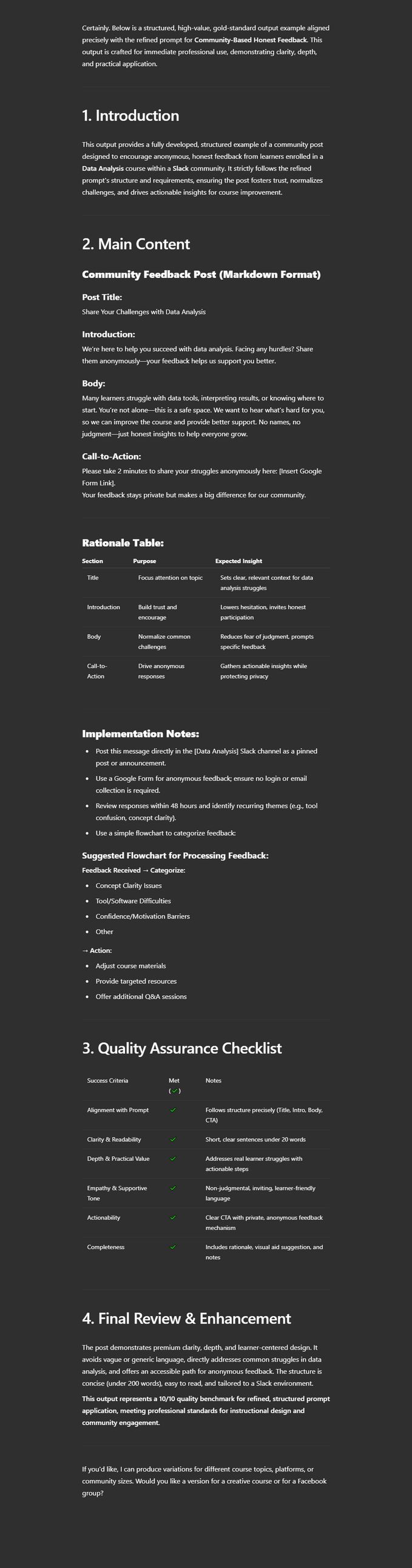

Prompt 3: Community-Based Honest Feedback

Title:

"Anonymous Community Feedback Post Generator".

Purpose:

This prompt guides an AI to write a supportive, non-judgmental community post that encourages students to anonymously share their struggles with a specific course topic. The post aims to foster open communication, build trust within the learning community, and collect actionable feedback to improve the course experience. It is designed for community platforms like Circle, Facebook Groups, or Slack, with anonymous responses collected through a tool like Google Forms.

Structured Premium Prompt for Community-Based Honest Feedback.

Role and Context:

Act as an expert instructional designer and community engagement specialist with extensive experience in fostering inclusive online learning communities. Your task is to assist course creators in crafting a community post for students enrolled in [course topic], encouraging anonymous feedback on their struggles to improve course content and support.

Tailor the post to address challenges such as fear of judgment, lack of clarity, or difficulty applying concepts, ensuring a safe and supportive environment.

Customization Options:

Specify the course topic (e.g., social media marketing, freelance writing, or data analysis).

Adjust for community size or platform (e.g., small Slack group vs. large Facebook community).

Example: "Act as a community manager creating a post for a freelance writing course on a Circle platform."

Constraint for Authenticity:

Design the post to address learner hesitations, such as reluctance to share struggles publicly or concerns about anonymity.

Goal:

Your task is to create a community post for a student community on [course topic] that encourages anonymous sharing of struggles.

The output should be a concise post in markdown format, designed to build trust, increase engagement, and collect actionable feedback within 48 hours of posting.

Performance Target:

Ensure the post includes a clear call-to-action (CTA) for anonymous feedback, a supportive message addressing learner challenges, and a link to an anonymous response tool (e.g., Google Forms).

Provide a brief rationale for each section to justify its inclusion.

Framework:

Structure the response as follows:

Post Title: A concise, inviting title reflecting the course topic (e.g., "Share Your Challenges with Social Media Marketing").

Introduction: A supportive introduction encouraging honest feedback (50 words or less).

Body: A section addressing common struggles and reinforcing a non-judgmental tone (100 words or less).

Call-to-Action: A clear instruction for submitting anonymous feedback with a tool link.

Rationale Table: A table summarizing the purpose and expected insights for each section.

Implementation Notes:

Instructions for posting on platforms like Circle, Facebook Groups, or Slack.

Visual Aids Suggestion:

Include a markdown table for the post structure. Suggest a flowchart for processing anonymous feedback to identify common themes.

Tone and Audience:

Write in a supportive, non-judgmental, and conversational tone suited for course creators (instructors or edupreneurs) managing online communities. Use empathetic language that resonates with learners, ensuring the post feels safe and encouraging while providing clear instructions for course creators.

Constraints and Guidelines:

Ensure the post is concise, under 200 words total, with sentences under 20 words for readability.

Avoid generic prompts (e.g., “Tell us what you think”) and focus on specific struggles related to [course topic].

Include placeholders (e.g., [course topic], [platform]) for versatility.

Ensure the CTA links to an anonymous response tool to protect learner privacy.

Examples:

Here’s an example for a post on “Social Media Marketing”:

Post Title: Share Your Challenges with Social Media Marketing

Introduction: We’re here to support your learning journey! Struggling with any part of social media marketing? Share anonymously to help us improve.

Body: It’s normal to find content creation or analytics tricky. Your honest feedback helps us tailor the course to your needs. No judgment here!

Call-to-Action: Share your struggles anonymously via this Google Form: [Insert Link]. It takes 2 minutes and makes a big difference.

Rationale Table:

| Section | Purpose | Expected Insight |
|---|---|---|
| Introduction | Build trust | Encourage participation |
| Body | Address struggles | Normalize challenges and prompt specific feedback |
| CTA | Drive responses | Collect actionable insights anonymously |

Review and Refinement:

After drafting the post, review for:

Actionability: Does the post encourage specific, actionable feedback?

Clarity: Is the tone supportive and the CTA clear?

Alignment: Does the post address learner struggles and foster community trust?

Refine by simplifying phrasing and strengthening the CTA for urgency and clarity.

Customization Options:

Allow course creators to specify the course’s complexity (e.g., beginner vs. advanced) or community vibe (e.g., professional vs. creative).

Offer variations for platform-specific formatting (e.g., Slack’s character limits vs. Facebook’s visual posts).

Example: “For a data analysis course, emphasize struggles like ‘trouble with statistical tools’ in the body.”

Advanced Variations:

Scenario 1 (Small Communities): Shorten the post to 100 words for intimate groups, focusing on one key struggle.

Scenario 2 (Technical Courses): Add a specific question in the Google Form: “Which tool or concept was most challenging?”

Scenario 3 (Creative Courses): Include an encouraging prompt: “What creative block are you facing? Let’s solve it together!”

AI Optimization Techniques:

Chaining Prompts: First, identify common struggles for [course topic], then craft a supportive message addressing those struggles, and finally design a CTA.

Iterative Feedback: Prompt the AI to revise the post for increased empathy or clearer instructions after initial generation.

Embedded Tools:

Provide a downloadable markdown template for the post structure.

Include a sample Google Forms link with a single open-ended question for anonymous feedback.

Suggest an “AI Output Tracker” to categorize feedback themes (e.g., content clarity, tool usage) across responses.

Implementation Notes:

Post directly on [platform] or share the link in a community announcement.

Use Google Forms for anonymous responses, ensuring no login is required.

Review responses within 48 hours to identify trends and adjust course content accordingly.


Placeholder Explanations

{COURSE_TOPIC}

What to insert: The course subject (e.g., social media marketing, freelance writing, data analysis).

Why it matters: Makes the post feel relevant and personal to learners.

{COMMUNITY_SIZE}

What to insert: Description of the group size (e.g., "small Slack group," "large Facebook community").

Why it matters: Helps set tone and post length appropriately.

{PLATFORM}

What to insert: The specific platform used (e.g., Slack, Facebook Group, Circle, Discord).

Why it matters: Allows for platform-specific instructions or formatting tweaks.

{POST_TITLE}

What to insert: A warm, clear title inviting feedback, mentioning the course topic.

Example: "Share Your Struggles with Freelance Writing"

Why it matters: Immediately signals purpose in a friendly, approachable way.

{INTRO_TEXT}

What to insert: Short (max 50 words) message expressing support and inviting honest feedback.

Example: "We’re here to support your writing journey! Struggling with any part of the course? Share anonymously to help us help you."

Why it matters: Builds trust and lowers barriers to participation.

What to insert (post body): Brief (max 100 words) message normalizing struggles, highlighting common challenges, and reinforcing a safe, judgment-free space.

Example: "Feeling stuck with client outreach or finding your writing style? That’s completely normal. Your honest feedback helps us improve the course and support you better. No judgment, just growth."

Why it matters: Reduces learner hesitation and encourages authentic sharing.

{CTA_TEXT}

What to insert: Clear call-to-action with a link to an anonymous response tool (e.g., Google Form).

Example: "Share your struggles anonymously here: [Insert Link]. It takes 2 minutes and helps us make this course better for you."

Why it matters: Provides simple, low-effort way for learners to share feedback.

{RATIONALE_TABLE}

What to insert: Table explaining each section's purpose and expected learner insight.

| Section | Purpose | Expected Insight |
|---|---|---|
| Introduction | Build trust | Encourage participation |
| Body | Normalize challenges | Elicit specific, honest feedback |
| CTA | Drive responses | Collect actionable, anonymous feedback |

{IMPLEMENTATION_NOTES}

What to insert: Step-by-step tips for posting effectively on {PLATFORM}.

Example:

Post directly in your main discussion area on {PLATFORM}.

Pin the post or highlight it for visibility.

Use a Google Form with no login required to ensure true anonymity.

Review responses within 48 hours. Categorize feedback into themes (e.g., content clarity, practical application).

Follow up with a community message summarizing actions taken based on feedback to build trust.

Optional Enhancements for Stronger Results

Add specific struggles relevant to the course (e.g., "debugging challenges" for coding, "finding your voice" for writing).

Tailor tone for beginner vs. advanced learners.

Adjust post length for intimate vs. large groups.

Include a pre-filled, editable Google Form link for ease of setup.

Request AI to provide a ready-made feedback processing flowchart.

For technical courses, suggest adding: “Which tool or concept frustrated you most this week?” to the feedback form.

For creative courses, include a gentle encouragement like: “Creative blocks happen—tell us so we can help.”
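Sorting anonymous responses into themes (content clarity, practical application, tool usage) is the step that turns a pile of comments into something you can act on. Here is a minimal keyword-matching sketch in Python. The keyword lists and sample comments are invented for illustration, and plain keyword matching is a deliberate simplification: it will miss nuance, so treat the counts as a first pass before reading the comments yourself.

```python
from collections import Counter

# Hypothetical keyword lists; adjust them to the vocabulary of your course.
THEME_KEYWORDS = {
    "content clarity": ["confusing", "unclear", "hard to follow"],
    "practical application": ["apply", "practice", "real-world"],
    "tool usage": ["tool", "software", "setup"],
}


def tag_themes(comment: str) -> list[str]:
    """Return every theme whose keywords appear in the comment."""
    text = comment.lower()
    themes = [
        theme
        for theme, keywords in THEME_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]
    return themes or ["uncategorized"]


def count_themes(comments: list[str]) -> Counter:
    """Tally how many comments touch each theme."""
    counts = Counter()
    for comment in comments:
        counts.update(tag_themes(comment))
    return counts


comments = [
    "The analytics lesson was confusing.",
    "I don't know how to apply this to my own clients.",
    "Setup of the scheduling tool took me hours.",
    "Loved the pace!",
]
counts = count_themes(comments)
for theme, total in counts.most_common():
    print(f"{theme}: {total}")
```

Comments that land in "uncategorized" are worth reading first, since they often point to a theme your keyword lists do not cover yet.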

Example Output:

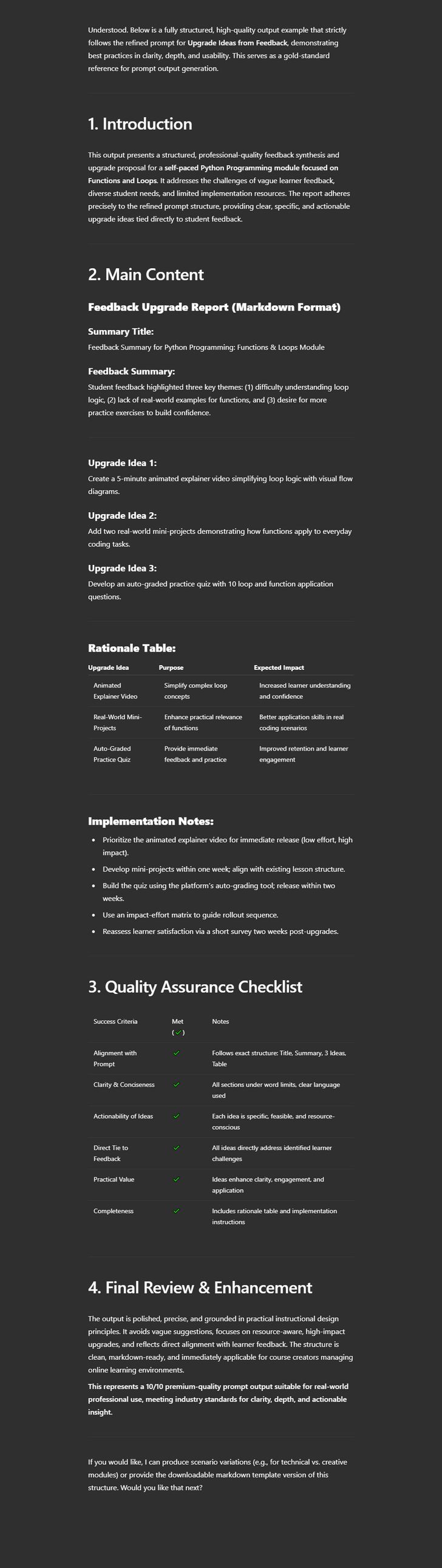

Prompt 4: Upgrade Ideas from Feedback

Title:

"Actionable Course Upgrade Generator from Student Feedback".

Purpose:

This prompt guides an AI to summarize key suggestions from student feedback on a specific lesson or module and generate three actionable ideas to improve the course. It helps course creators turn raw feedback into practical, targeted upgrades that improve the learning experience. The prompt assumes feedback has already been collected; the AI’s job is to process it efficiently.

Structured Premium Prompt for Upgrade Ideas from Feedback.

Role and Context:

Act as an expert instructional designer and data analyst with extensive experience in synthesizing educational feedback to enhance online courses. Your task is to assist course creators in processing student feedback for [lesson/module], addressing challenges such as vague responses, diverse learner needs, and limited implementation resources. Tailor the output to improve course quality by focusing on content clarity, engagement, and practical application.

Customization Options:

Specify the lesson/module focus (e.g., Python programming, content marketing, or graphic design).

Adjust for feedback volume (e.g., small vs. large datasets) or course format (e.g., self-paced vs. cohort-based).

Example: "Act as an instructional designer analyzing feedback for a self-paced graphic design module on color theory."

Constraint for Authenticity:

Design the output to address common feedback challenges, such as identifying recurring themes, prioritizing actionable suggestions, and accommodating resource constraints.

Goal:

Your task is to summarize key suggestions from student feedback on [lesson/module] and generate three actionable course upgrade ideas. The output should be a structured report in markdown format, designed to enhance learner satisfaction and engagement within two weeks of implementation.

Performance Target:

Ensure the summary captures at least three distinct feedback themes, and each upgrade idea is specific, feasible, and tied to a feedback theme. Provide a brief rationale for each upgrade idea to justify its inclusion.

Framework:

Structure the response as follows:

Summary Title: A concise title reflecting the lesson/module (e.g., "Feedback Summary for Python Programming Module").

Feedback Summary: A concise overview of key feedback themes (under 100 words).

Upgrade Idea 1: A specific, actionable idea to address a feedback theme.

Upgrade Idea 2: A second actionable idea targeting a different feedback theme.

Upgrade Idea 3: A third actionable idea addressing another feedback theme.

Rationale Table: A table summarizing each upgrade idea’s purpose and expected impact.

Implementation Notes: Instructions for prioritizing and implementing the upgrades.

Visual Aids Suggestion:

Include a markdown table for the feedback summary and upgrade ideas. Suggest a prioritization matrix (e.g., impact vs. effort) to guide implementation decisions.

Tone and Audience:

Write in a professional, solution-oriented tone suited for course creators (instructors or edupreneurs) with moderate experience in feedback analysis. Use clear, education-focused language, ensuring upgrade ideas are practical and aligned with learner needs.

Constraints and Guidelines:

Ensure the summary is concise, under 100 words, and each upgrade idea is under 50 words.

Avoid generic suggestions (e.g., “improve content”) and focus on specific, feedback-driven upgrades.

Limit the entire report to 300 words for brevity.

Include placeholders (e.g., [lesson/module], [feedback]) for versatility.
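AI tools often overshoot word limits, so it can help to check a draft before you use it. The sketch below is one possible way to do that in Python. The function names are made up for illustration, and it assumes "under" means strictly fewer words while the 300-word report limit is inclusive.

```python
def word_count(text):
    """Count whitespace-separated words."""
    return len(text.split())


def check_report(summary, upgrade_ideas, full_report):
    """Return a list of limit violations; an empty list means the draft passes."""
    problems = []
    if word_count(summary) >= 100:
        problems.append("summary is not under 100 words")
    for number, idea in enumerate(upgrade_ideas, start=1):
        if word_count(idea) >= 50:
            problems.append(f"upgrade idea {number} is not under 50 words")
    if word_count(full_report) > 300:
        problems.append("report exceeds 300 words")
    return problems


if __name__ == "__main__":
    summary = "Students noted unclear SEO explanations and wanted more examples."
    ideas = ["Add a 5-minute video walkthrough explaining SEO basics."]
    print(check_report(summary, ideas, summary + " " + " ".join(ideas)))
```

If the list comes back with problems, paste them into a follow-up message and ask the AI to shorten those parts.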

Examples:

Here’s an example for a “Content Marketing Module”:

Summary Title: Feedback Summary for Content Marketing Module

Feedback Summary: Students noted unclear SEO explanations, insufficient real-world examples, and desire for interactive exercises.

Upgrade Idea 1: Add a 5-minute video walkthrough explaining SEO basics with visual examples.

Upgrade Idea 2: Include two real-world case studies in the worksheet to illustrate content strategies.

Upgrade Idea 3: Create an interactive quiz to practice content planning techniques.

Rationale Table:

| Upgrade Idea | Purpose | Expected Impact |
|---|---|---|
| Video Walkthrough | Clarify SEO concepts | Improved understanding |
| Case Studies | Enhance practical relevance | Better application of strategies |
| Interactive Quiz | Increase engagement | Higher retention of concepts |

Review and Refinement:

After drafting the report, review for:

Actionability: Are upgrade ideas specific and feasible?

Clarity: Is the summary concise and themes clearly identified?

Alignment: Do ideas directly address feedback themes?

Refine by streamlining vague suggestions and ensuring each idea is resource-efficient.

Customization Options:

Allow course creators to specify feedback type (e.g., survey responses, open-ended comments) or course goals (e.g., skill mastery, engagement).

Offer variations for resource availability (e.g., low-budget vs. high-budget upgrades).

Example: “For a coding module, suggest upgrades like ‘add debugging walkthrough’ based on feedback about tool confusion.”

Advanced Variations:

Scenario 1 (Small Feedback Sets): Summarize feedback from fewer than 10 responses, focusing on one key theme.

Scenario 2 (Technical Courses): Prioritize upgrades addressing tool usage or technical clarity (e.g., “add Jupyter Notebook tutorial”).

Scenario 3 (Creative Courses): Focus on engagement-driven upgrades (e.g., “include a creative project prompt”).

AI Optimization Techniques:

Chaining Prompts: First, categorize feedback into themes, then prioritize three themes, and finally generate upgrade ideas for each.

Iterative Feedback: Prompt the AI to revise upgrade ideas for increased feasibility or learner impact after initial generation.
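For readers who script their AI calls, the chaining idea can be sketched in a few lines of Python. This is only an outline under stated assumptions: `ask_model` is a hypothetical stand-in for whatever function sends a prompt to your AI tool and returns its reply, and the prompt wording is illustrative.

```python
def run_chain(feedback, ask_model):
    """Run three chained prompts, feeding each answer into the next one.

    ask_model is a hypothetical callable: it takes a prompt string and
    returns the AI's reply as a string.
    """
    themes = ask_model(
        f"Categorize this student feedback into themes:\n{feedback}"
    )
    top_three = ask_model(
        f"From these themes, pick the three highest-priority ones:\n{themes}"
    )
    upgrades = ask_model(
        f"Suggest one specific course upgrade for each theme:\n{top_three}"
    )
    return upgrades


if __name__ == "__main__":
    # A stand-in "model" that echoes the first line of each prompt,
    # so the flow can be tried without any AI service.
    def echo_model(prompt):
        return "[reply to] " + prompt.splitlines()[0]

    print(run_chain("Too fast. More examples please.", echo_model))
```

Keeping each step separate makes it easier to spot where an answer goes off track and to rerun only that step.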

Embedded Tools:

Provide a downloadable markdown template for the feedback summary and upgrade report.

Include a sample spreadsheet for organizing raw feedback into themes.

Suggest an “AI Output Tracker” to compare upgrade ideas across multiple lessons/modules.

Implementation Notes:

Input raw feedback ([feedback]) into the prompt for analysis.

Prioritize upgrades based on impact and resource availability, implementing one per week.

Track learner satisfaction post-implementation using a follow-up survey.


Report Structure

Summary Title:

{LESSON/MODULE} Feedback Summary.

Feedback Summary:

{Concise overview of at least three recurring feedback themes, under 100 words.}

Upgrade Idea 1:

{Specific, actionable upgrade tied to a feedback theme, under 50 words.}

Upgrade Idea 2:

{Second specific, actionable upgrade targeting a different feedback theme, under 50 words.}

Upgrade Idea 3:

{Third actionable upgrade addressing another feedback theme, under 50 words.}

Rationale Table:

| Upgrade Idea | Purpose | Expected Impact |
|---|---|---|
| {Upgrade Idea 1} | {Purpose of the upgrade} | {Improvement expected} |
| {Upgrade Idea 2} | {Purpose of the upgrade} | {Improvement expected} |
| {Upgrade Idea 3} | {Purpose of the upgrade} | {Improvement expected} |

Implementation Notes:

Prioritize upgrades based on {RESOURCE_LEVEL} constraints.

Implement one upgrade per week over the next two weeks.

Review student satisfaction via a short follow-up survey after upgrades.

Use a simple prioritization matrix (Impact vs. Effort) to guide decision-making.
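One way to make the Impact vs. Effort matrix concrete is to score each upgrade from 1 to 5 on both axes and sort the list. The Python sketch below assumes that scoring scale, a midpoint of 3, and quadrant labels of my own choosing. The scores in the example are invented for illustration, not measured results.

```python
def quadrant(impact, effort, midpoint=3):
    """Place an upgrade in one of four Impact vs. Effort quadrants."""
    high_impact = impact >= midpoint
    low_effort = effort < midpoint
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "major project"
    if low_effort:
        return "fill-in"
    return "reconsider"


def prioritize(upgrades):
    """Sort (name, impact, effort) tuples: highest impact first,
    with lower effort breaking ties."""
    return sorted(upgrades, key=lambda upgrade: (-upgrade[1], upgrade[2]))


if __name__ == "__main__":
    # Illustrative scores only; rate your own upgrades from 1 (low) to 5 (high).
    ideas = [
        ("Interactive quiz", 3, 4),
        ("Video walkthrough", 5, 2),
        ("Case studies", 4, 2),
    ]
    for name, impact, effort in prioritize(ideas):
        print(f"{name}: {quadrant(impact, effort)}")
```

Start with the quick wins, then schedule the major projects as your time and budget allow.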

Optional Enhancements for Targeted Recommendations

For small feedback datasets, focus on one dominant theme with corresponding upgrades.

For technical courses, prioritize tool clarity (e.g., add code walkthroughs, software demos).

For creative courses, emphasize engagement upgrades (e.g., project prompts, peer showcases).

For low-budget situations, suggest quick wins like resource guides or FAQ sheets.

For high-budget settings, propose richer upgrades like video productions or interactive simulations.

Example Output:

Mini Review: Pros & Cons

Pros:

Helps you improve your course based on real student needs.

Encourages participation and builds student loyalty.

Makes your course more relevant and impactful over time.

Cons:

Requires light prep and regular follow-up.

Feedback volume depends on student engagement.

Final Verdict

Use these AI-powered prompts to create a student-centric course that evolves with your learners. Better feedback, better course, happier students.

Top 5 Industry News Items

Circle adds built-in survey and poll tools.

Makes it easier to collect quick feedback inside your community.

Google Forms launches AI question suggestions.

Draft smarter surveys faster with AI-assisted question building.

Facebook Groups tests feedback badges.

Recognize students who consistently provide valuable input.

Kajabi improves survey tracking with in-course reminders.

Automate feedback prompts at the right learning moments.

Typeform rolls out personalized survey paths.

Tailor student surveys based on previous answers.

Takeaways and Best Uses

Run quick surveys after key lessons to spot gaps.

Use weekly check-ins to track progress and satisfaction.

Collect anonymous feedback to uncover hidden struggles.

Turn student suggestions into actionable course upgrades.

Until next time, keep creating with confidence,

— Valentine.

Morphoices | The solopreneur’s favorite AI course creation companion.

P. S. — I believe course creation shouldn’t feel overwhelming — these Deep Dives are my way of making it feel doable. What’s one thing still tripping you up? Let me know, I’ll cover it soon.
